Understanding how humans use physical contact to interact with the world is a key step toward human-centric artificial intelligence. While inferring 3D contact is crucial for modeling realistic and physically plausible human-object interactions, existing methods either operate in 2D, consider body joints rather than the body surface, use coarse 3D body regions, or do not generalize to in-the-wild images.

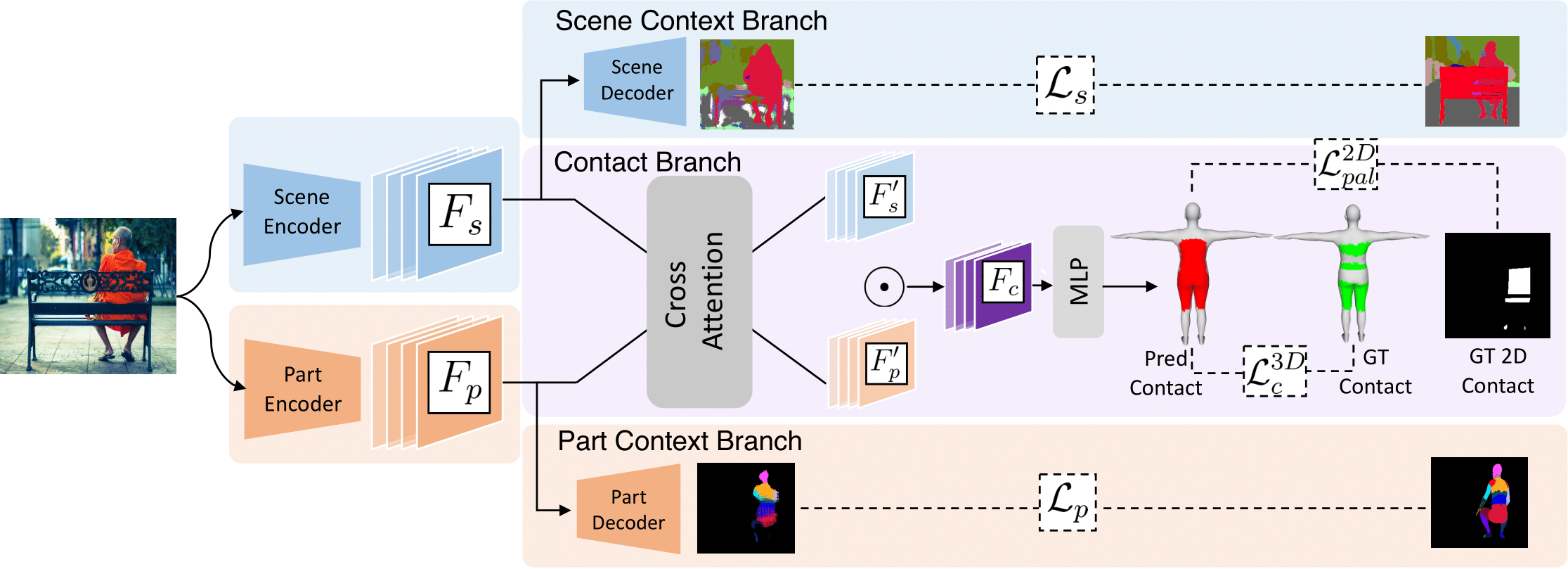

In contrast, we focus on inferring dense 3D contact between the full body surface and objects in arbitrary images. To achieve this, we first collect DAMON, a new dataset containing dense vertex-level contact annotations paired with RGB images that depict complex human-object and human-scene contact. Second, we train DECO, a novel 3D contact detector that uses both body-part-driven and scene-context-driven attention to estimate vertex-level contact on the SMPL body. DECO builds on the insight that human observers recognize contact by reasoning about the contacting body parts, their proximity to scene objects, and the surrounding scene context.
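To make "vertex-level contact on the SMPL body" concrete, the sketch below represents contact as a boolean mask over the 6,890 vertices of the SMPL template mesh, obtained by thresholding per-vertex probabilities. The helper name `contact_mask_from_logits`, the assumption that a model emits one logit per vertex, the sigmoid, and the 0.5 threshold are all illustrative choices, not DECO's released interface.

```python
import numpy as np

# The SMPL template mesh has 6890 vertices.
NUM_SMPL_VERTICES = 6890


def contact_mask_from_logits(logits: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Hypothetical helper: turn per-vertex contact logits into a boolean mask.

    Assumes one logit per SMPL vertex; the sigmoid and the 0.5 threshold are
    illustrative assumptions, not DECO's documented behavior.
    """
    logits = np.asarray(logits, dtype=np.float64)
    if logits.shape != (NUM_SMPL_VERTICES,):
        raise ValueError(f"expected shape ({NUM_SMPL_VERTICES},), got {logits.shape}")
    probs = 1.0 / (1.0 + np.exp(-logits))  # sigmoid -> per-vertex contact probability
    return probs >= threshold


# Usage: strongly positive logits on the first ten vertices, negative elsewhere.
logits = np.full(NUM_SMPL_VERTICES, -10.0)
logits[:10] = 5.0
mask = contact_mask_from_logits(logits)
contact_vertices = np.flatnonzero(mask)  # indices of SMPL vertices in contact
```

A fixed-length mask indexed by vertex ID makes contact from different images and methods directly comparable, since every prediction lives on the same mesh topology.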

We evaluate DECO extensively on DAMON as well as on the RICH and BEHAVE datasets, where it significantly outperforms existing state-of-the-art (SOTA) methods across all benchmarks. We also show qualitatively that DECO generalizes well to diverse and challenging real-world human interactions in natural images.
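Vertex-level contact estimates are commonly scored against ground truth with per-vertex precision, recall, and F1. A minimal sketch, assuming predictions and annotations are boolean masks over the same SMPL vertices; the function name `contact_prf` is hypothetical, and whether these exact metrics and conventions match the reported benchmarks is an assumption.

```python
import numpy as np


def contact_prf(pred: np.ndarray, gt: np.ndarray) -> tuple[float, float, float]:
    """Per-vertex precision, recall and F1 between two boolean contact masks.

    Hypothetical helper for illustration. Ratios with a zero denominator are
    reported as 0.0, which is one convention among several.
    """
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    if pred.shape != gt.shape:
        raise ValueError("prediction and ground truth must cover the same vertices")
    tp = int(np.sum(pred & gt))   # predicted and annotated as contact
    fp = int(np.sum(pred & ~gt))  # predicted contact that is not annotated
    fn = int(np.sum(~pred & gt))  # annotated contact that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Usage on a toy 4-vertex example: one hit, one false alarm, one miss.
p, r, f = contact_prf(np.array([1, 1, 0, 0]), np.array([1, 0, 1, 0]))
```

On a real benchmark one would presumably compute these scores per image and average them over the test set.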

DAMON (Dense Annotation of 3D HuMAn Object Contact in Natural Images) is a collection of vertex-level 3D contact labels on SMPL paired with color images of people in unconstrained environments, covering a wide diversity of human-scene and human-object interactions. The images are sourced from existing human-object interaction (HOI) datasets and filtered to keep only valid human-contact images, removing those with indirect human-object interactions, heavily cropped humans, motion blur, distortion, or extreme lighting conditions.

A total of 5,522 images are annotated: contact vertices are painted and assigned an appropriate label from a set of 84 object labels and 24 body-part labels. The contact region for each object class is color-coded for easier visualization. The annotations focus on two types of contact: (1) scene-supported contact, where humans are supported by scene objects, and (2) human-supported contact, where objects are supported by a human.
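As a rough illustration of the annotation scheme, here is a hypothetical in-memory record for one annotated image: painted SMPL vertex indices grouped by object label, with each object tagged as scene-supported or human-supported. The class, field, and method names are assumptions for illustration, body-part labels are omitted for brevity, and none of this reflects the released DAMON file format.

```python
from dataclasses import dataclass, field
from typing import Optional

import numpy as np

NUM_SMPL_VERTICES = 6890  # vertices on the SMPL template mesh
CONTACT_TYPES = {"scene-supported", "human-supported"}


@dataclass
class ImageContactAnnotation:
    """Hypothetical record for one annotated image (not the official DAMON format)."""

    image_path: str
    # object label -> indices of SMPL vertices painted as touching that object
    vertices_per_object: dict[str, list[int]] = field(default_factory=dict)
    # object label -> "scene-supported" or "human-supported"
    contact_type: dict[str, str] = field(default_factory=dict)

    def add_contact(self, obj: str, vertices: list[int], kind: str) -> None:
        """Record painted vertices for one contacted object and its contact type."""
        if kind not in CONTACT_TYPES:
            raise ValueError(f"unknown contact type: {kind}")
        self.vertices_per_object.setdefault(obj, []).extend(vertices)
        self.contact_type[obj] = kind

    def contact_mask(self, kind: Optional[str] = None) -> np.ndarray:
        """Union of painted vertices, optionally restricted to one contact type."""
        mask = np.zeros(NUM_SMPL_VERTICES, dtype=bool)
        for obj, verts in self.vertices_per_object.items():
            if kind is None or self.contact_type[obj] == kind:
                mask[verts] = True
        return mask


# Usage: a person sitting on a chair while holding a cup.
ann = ImageContactAnnotation("example.jpg")
ann.add_contact("chair", [0, 1, 2], "scene-supported")
ann.add_contact("cup", [100, 101], "human-supported")
all_contact = ann.contact_mask()                     # 5 vertices in total
scene_contact = ann.contact_mask("scene-supported")  # 3 vertices
```

Keeping per-object vertex lists, rather than only a single binary mask, preserves the object-level labels while still allowing the binary per-vertex target used for dense contact estimation to be derived on demand.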


@InProceedings{tripathi2023deco,
  author    = {Tripathi, Shashank and Chatterjee, Agniv and Passy, Jean-Claude and Yi, Hongwei and Tzionas, Dimitrios and Black, Michael J.},
  title     = {{DECO}: Dense Estimation of {3D} Human-Scene Contact In The Wild},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month     = {October},
  year      = {2023},
  pages     = {8001-8013}
}